Compuverde vNAS enables university to consolidate storage silos into one unified storage platform

OWL UAS (Hochschule Ostwestfalen-Lippe, University of Applied Sciences) is a state tech university in Germany that carries out research and higher education.

With 6,700 students, 174 professors and 527 employees, OWL UAS’ goal is to tackle complex problems and global challenges to ensure that knowledge and innovations benefit society.

In the field of research and technology, OWL UAS has three main profile areas: life science technology, energy research and industrial IT.

The Institute for Energy Research (iFE) is an interdisciplinary research institute with the goal of developing efficient, networked and innovative solutions for sustainable energy systems.

The Institute Industrial IT (InIT) is located in a science-to-business center (Centrum Industrial IT) on the Lemgo Innovation Campus, where more than 200 engineers from companies and research institutes carry out research in the field of industrial informatics and industrial automation for cyber-physical systems.

The university provides education and research in engineering, economics and management, life science technologies, design and construction at campuses in Lemgo, Detmold and Höxter.

Challenge

Migrate all the university’s data onto one single storage pool to improve service, administration, backup and disaster recovery

The university had 70 NASes spread across a myriad of small storage islands. In addition, there was a metro cluster storage solution that was starting to lack support and was even more expensive to maintain and update. The NetApp metro cluster, with two heads (3240) — some shelves with SSDs, SAS and SATA disks — was running six virtual filers. It was much too complex to administrate, so the university aimed for a unified storage solution that covered all their needs.

Dipl.-Ing. Martin Hierling said, “We are in research and education, where everybody can do what they want. In German we call that ‘Freiheit von Forschung und Lehre’ (freedom of research and education).”

There are 7,000 IP addresses, 8,000 network ports, about 5,000 hosts (such as printers, laptops, workstations and servers) and about 500 access points with 4,000 mobile devices per day. There are 12 AD domains at the university: the main campus runs three, and the other departments run the other nine. The network is segmented into approximately 180 different networks. Every department and facility in the three locations has between one and five network segments where they run their own equipment.

The main business services are centralized: identity and resource management, groupware, VoIP, web and mail server, network and firewall. But in the important area of storage and directory services, there was a completely different picture. There was no central and secured storage for all the important scientific data.

The goal became to find one storage solution to replace everything: one that is highly scalable, has a global file system that can span all the campuses, and protects all data against faults and data loss. There also had to be a plan for centralized backup and disaster recovery.

The project aimed to minimize administration, to make capacity extensions easy and flexible, to decouple hardware from storage functionality, and to reduce costs.

One unified and scalable storage solution would ensure reduction of overhead, administration and maintenance and improve the quality of backup and disaster recovery.

Dr. Lars Köller, director of S(kim), said, “The main goal of the proposal to our Ministry of Arts and Sciences was the consolidation of the server, storage and active directory infrastructure at OWL UAS.”

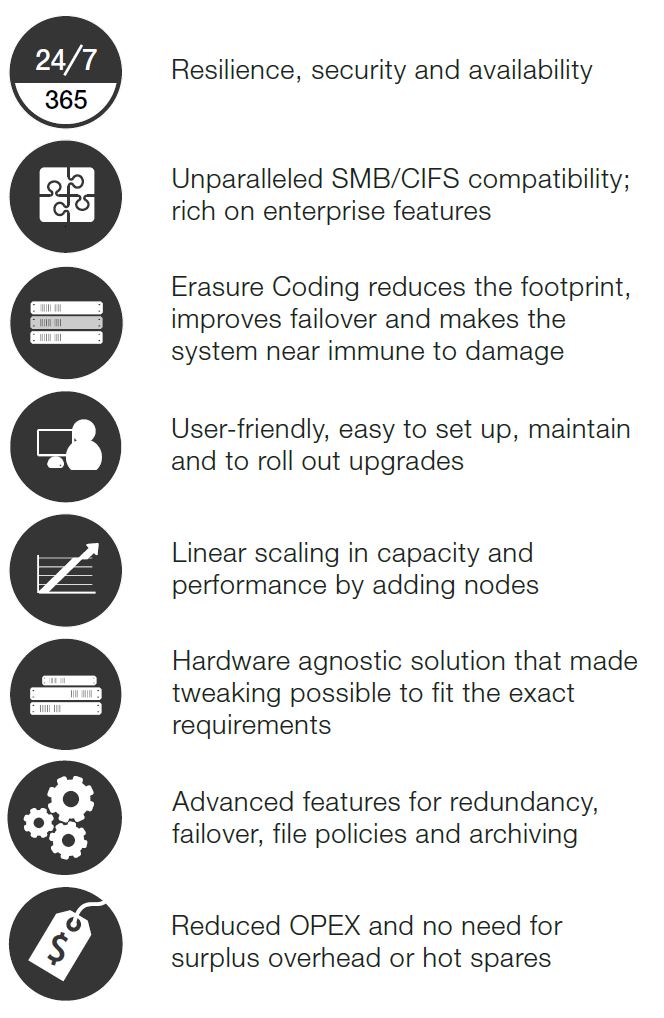

System integrator EUROstor was able to present Compuverde software-defined storage due to its flexibility and hardware-agnostic approach, along with important enterprise features like multitenancy, AD support, tiering, snapshots, backup support, metro cluster, async replication and possibilities for disaster recovery.

Native implementation of protocols including SMB/CIFS and NFS, with no plug-ins or third-party software, ensures compatibility and absolute consistency between protocols and access points. And with no master node or heads, there are no expensive bottlenecks to take into account.

“Three different solutions were evaluated: Compuverde, NetApp and IBM Spectrum Scale,” Dr. Köller explained.

“Compared to the alternatives, Compuverde offered a lot of advantages and possibilities. The main point is how easy that storage runs, how resilient it is against failure, data loss and power loss, and the protocol compatibility in a heterogeneous university client environment.”

As a proof of concept, initial tests were carried out to demonstrate that the storage solution, together with HP hardware, was able to handle everything that was needed.

Hierling said: “The support team has been very helpful with all the test cases, and all tests showed that Compuverde can handle what we need. It is easy to run, has a really high Microsoft compatibility and multitenancy, runs on standard hardware and is endlessly scalable.”

Solution and Benefits

With the Compuverde solution and multitenancy, 12 AD domains are present across the university as a whole. Since the same hardware is used as access points and storage for all domains, only one shared amount of overhead is necessary for each campus, and there is only one system with three storage clusters to administer.

The goal is to centralize everything and migrate over to Compuverde storage. “One huge part is all the storage. Another is VMware,” Hierling said. “We scanned the entire network for Synology Stations and found about 50 boxes. We didn’t scan for Qnap, Western Digital and all the other NAS systems, but we want them ALL on our Compuverde Cluster. That is the plan.”

The flexibility is a key strength.

“We have a broad variety of users, IoT devices, VMware, data mining and more, and we put them all on the Compuverde solution,” Hierling said. “CIFS/SMB and NFS are used for user and webserver shares. In addition, we are testing S3 and NFS for VMware. There is some expensive storage for VMware and also a bunch of large 4-8 TB virtual machines that we have moved to one of the Compuverde clusters. This storage solution has been tested now for about a year. We run one side of our new Microsoft Exchange Cluster inside VMware on top of the Compuverde NFS.”

The Compuverde scale-out vNAS storage solution is easy to run, requires very little maintenance and interaction, and includes nearly endless possibilities for future scalability. For hardware, HP servers were chosen, with SSD and spinning disks, no RAID, and with Cisco Nexus infrastructure. In this first phase, three campuses are deployed: Lemgo is equipped with a 10-node cluster, and the two other campuses, Detmold and Höxter, have one five-node cluster each. That’s three storage clusters in all. Next, an additional 192 TB of storage is being added to the main cluster.

In the future, basically any standardized hardware can be added, such as disks, nodes and infrastructure, so that each piece of hardware will take its share of the total load, automatically balancing with the existing hardware and improving performance, IOPS and capacity further.

The solution consists of the following hardware for each node (20 nodes in total):

| Hardware Setup | |
|---|---|
| Number of nodes | 3 clusters, 20 nodes |
| CPU | Intel® Xeon Gold 5115 8-core |
| RAM | 128 GB per node |
| Cache | 2 x 1.6 TB SSD |
| Storage drives | 7 x 6 TB SATA |
| Controller | HP 816i (no RAID, no cache) |
| NIC | 2 x 10 Gbit/s and 4 x 10 Gbit/s |
| Switches | Cisco Nexus |

This gives a total of 840 TB of raw storage. With 5+2 erasure coding, the storage efficiency is 71 percent, and any two nodes are allowed to fail without service failure.
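The capacity figures can be checked with a short calculation. The following is a minimal sketch in Python, assuming the per-node drive counts from the hardware table and the 10/5/5 node split described for the three campuses. The class and function names are illustrative only and are not part of any Compuverde tooling. The usable-capacity estimate is also an assumption: it ignores metadata, reserved space and the SSD cache, and it does not model how fragments are placed across nodes.

```python
from dataclasses import dataclass

# Figures taken from the hardware table and the cluster description.
TB_PER_DRIVE = 6       # 6 TB SATA drives
DRIVES_PER_NODE = 7    # 7 storage drives per node
DATA_FRAGMENTS = 5     # "5+2" erasure coding: 5 data fragments...
PARITY_FRAGMENTS = 2   # ...plus 2 parity fragments


@dataclass(frozen=True)
class Cluster:
    """Illustrative model of one storage cluster (hypothetical helper)."""
    site: str
    nodes: int

    @property
    def raw_tb(self) -> int:
        # Raw capacity counts spinning disks only, not the SSD cache.
        return self.nodes * DRIVES_PER_NODE * TB_PER_DRIVE


def efficiency(data: int, parity: int) -> float:
    """Fraction of raw capacity left for data under data+parity coding."""
    return data / (data + parity)


CLUSTERS = [Cluster("Lemgo", 10), Cluster("Detmold", 5), Cluster("Höxter", 5)]

total_raw_tb = sum(c.raw_tb for c in CLUSTERS)
eff = efficiency(DATA_FRAGMENTS, PARITY_FRAGMENTS)
# Rough upper bound on usable space (assumption: no other overhead).
usable_tb = total_raw_tb * eff

if __name__ == "__main__":
    for c in CLUSTERS:
        print(f"{c.site}: {c.nodes} nodes, {c.raw_tb} TB raw")
    print(f"Total raw: {total_raw_tb} TB")
    print(f"Efficiency: {eff:.0%}")
    print(f"Approx. usable: {usable_tb:.0f} TB")
```

Twenty nodes with seven 6 TB drives each reproduce the 840 TB raw figure, and 5 / (5 + 2) rounds to the stated 71 percent.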

Having identical hardware for all nodes and all locations helps make it even simpler to deploy and administer.

The identical hardware makes each cluster and each node balance well out of the box, so that each node and each disk handles an equal amount of work and data, optimizing parallelism in the solution.

Benefits and Future

OWL UAS is migrating from six NetApp vFilers and 70 NAS devices to Compuverde. The NDMP protocol is used for automated three-way backup directly from the storage cluster. The plan is to connect antivirus servers to have automatic virus scanning performed in the background.

Support is exceeding expectations. “Support is concerned about things we have problems with, or features that we need, and we are also involved in testing the new features,” said Hierling. When the time comes and the cluster needs to be extended, there is no hassle. “We only buy nodes and licenses, and we are ready.”

“Based on our one-year experience with the new infrastructure and unified storage, we estimate that the Compuverde storage solution lowers our administration effort by a factor of three,” Dr. Köller said.

Future

Next, OWL UAS is expanding Compuverde storage to another site, the new and innovative degree program of Precision Farming, where they are planning an experimental farm with automation and new processes for agriculture. The farm will conduct field experiments on minimizing the use of resources and optimizing the yield. This farm will be equipped with computing, network, WLAN, VMware and hyperconverged (HCI) storage. HCI was a solution that fit well in the vNAS infrastructure.

With Compuverde’s forthcoming Hybrid Cloud or Async Replication feature, it will be possible to connect and share data and services across campuses in a much more automated way, without adding any new hardware. As each campus is in a separate location, it will be possible to use spare overhead at each campus to keep copies of data and to move freely between campuses in case of a disaster. In the future, with Hybrid Cloud, it will be possible to access and update any or all important scientific data from any campus in real time. And should anything go wrong, the services will be available from the next campus.